Hi folks! We are back with an exciting new post in Maho’s corner, this one dedicated to explaining what exactly AI is. You’ve probably seen a lot about AI in the news, on your phone, or even in conversations recently. That being said, there’s still a ton of mystery around it, and it’s not the simplest of subjects to broach. There seems to be never-ending drama between tech bros like Sam Altman and China’s DeepSeek, Nvidia stock going through the roof, and a mix of unease and amazement at some of the tasks that larger models like OpenAI’s ChatGPT and Anthropic’s Claude are able to perform. So, if you’re new to all of this or simply trying to gain a better understanding of what exactly AI is, then I have great news for you: you’ve come to the right place.

I will take a moment to say that if you’re looking to dive deeper into the technical side of how AI works at a fundamental level, I would highly recommend reading my three-part series on Machine Learning and Neural Networks: (Machine Learning Part One, Machine Learning Part Two, and Machine Learning Part Three). These posts cover how models are fine-tuned to identify and predict correct responses. If you’re not interested in diving too deep, that’s okay, as we won’t be exploring the overly complicated parts of AI in this post. As the title implies, this article is intended for anyone and everyone who wants to gain a sense of what AI is and become a little more knowledgeable on the subject.

Okay, so let’s get started! Similar to posts I’ve shared in the past on various subjects, I like to begin with the basics. AI stands for Artificial Intelligence. But what exactly does that mean? How can intelligence be artificial? Well, like most machines and software that we use today, it involves some complicated math and a computer that can turn code into something that works.

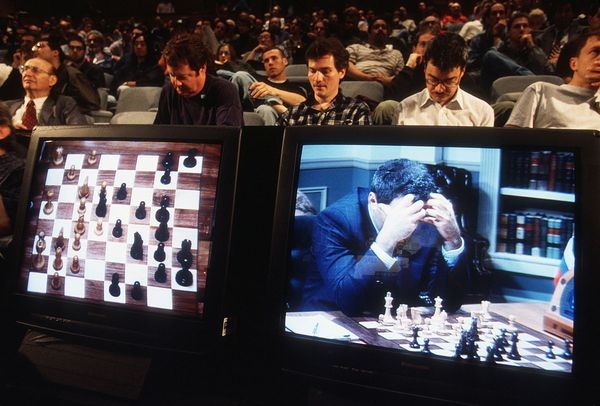

Now, for something to be “intelligent”, it has to be able to perform a task by gathering the information presented and taking the action that best aligns with the desired outcome. This isn’t a new concept, as almost all tasks require some level of intelligence, whether it’s a baby crawling to their mother or a software engineer writing code. Granted, there are varying degrees of difficulty. As for the field of AI and the concept of making intelligence artificial, this isn’t necessarily a new subject either. Depending on how old you are or what you were taught in history, you may know that a lot of folks consider IBM’s Deep Blue system defeating world chess champion Garry Kasparov in 1997 to be the first real show of what could be possible with AI, and that was almost 30 years ago!

So, where does this intelligence come from? We know AI is composed of various computers and machines, but how does AI know how to accomplish a task? Well, let’s start with the easiest and most common use case that you may be familiar with: AI chat messaging and prompting. If you’ve used ChatGPT, Claude, Google’s Gemini, DeepSeek, etc. before, you’ve probably typed a question into the chat box and received some form of a response, ideally the answer you were hoping for. When these services initially came out, they struggled somewhat to produce correct responses. However, over the last two years, there has been significant progress.

Okay okay, but we still haven’t covered how the intelligence is happening. The intelligence component comes from two pieces working together: tokenization and encoding, which turn your message into a form the AI can work with, and what we’ll call contextual transformer layers, which that encoded message is fed into. The transformer layers are the masterminds behind determining how to filter potential responses, and they are a type of neural network (this is covered in my machine learning and neural network posts). You can think of a neural network as a loose attempt to replicate how a human’s brain works, with all the various neurons connected together.

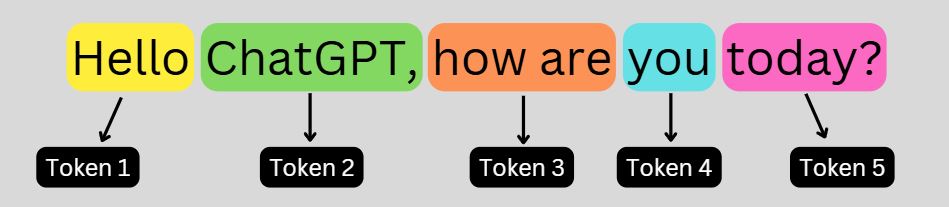

The tokenization and encoding piece refers to how your message is received and broken up into pieces for the AI to interpret. I’m going to simplify this a bit, but essentially every word, collection of letters, or phrase you input into the prompt gets broken up into tokens. That means if you typed something like “Hello ChatGPT, how are you today?”, it would be broken into tokens: “Hello” = token1, “ChatGPT” = token2, “how are” = token3, “you” = token4, and “today?” = token5. In this simplified picture, a string of words that doesn’t carry much meaning on its own, such as “how are”, is treated as a single token because it doesn’t require as much effort to interpret. Real tokenizers vary, and many split text into words, pieces of words, and punctuation instead. The encoding piece refers to converting each token into a number the AI can look up, so your text ends up as a sequence of numeric IDs.
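To make the tokenize-then-encode idea a little more concrete, here is a minimal sketch in Python. It is not how ChatGPT or Claude actually tokenize text; the `tokenize`, `build_vocab`, and `encode` helpers are names I made up for illustration, and the rule used here (split on words and punctuation) is an assumption chosen for simplicity. Because of that rule, the pieces come out a little differently from the five-token breakdown above.

```python
import re


def tokenize(text):
    """Split text into word tokens and single punctuation tokens (a simplification)."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens):
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Replace each token with its integer ID."""
    return [vocab[tok] for tok in tokens]


message = "Hello ChatGPT, how are you today?"
tokens = tokenize(message)
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)

print(tokens)  # the pieces the message was split into
print(ids)     # the same message as a list of numbers
```

The takeaway is the shape of the process: text goes in, a list of numbers comes out, and everything after that point is math on those numbers.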

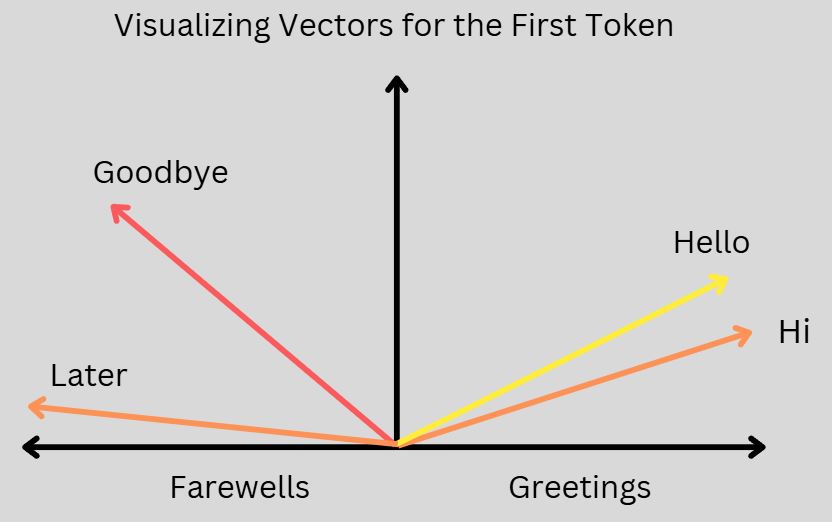

So, the reason your input message gets broken into multiple tokens is that each token can then be analyzed and used to determine the math behind what the correct response should be. If you ever asked in math class growing up, “why are we learning this?”, well, it’s because whoever grew up to be the AI engineer in your class needed to know the basic algebra that turned into calculus, then some even more complex math, and eventually used all of that to apply a math concept called vectors to tokens.

Basically, by representing each word with a token, AI can work out how that token relates to all the others in the whole input sequence, use the fancy math to determine why the word is there, and then weight possible responses by likelihood and relevance. This allows AI to capture dependencies across words regardless of their position. That math has no built-in sense of sequence, though, so to make sure it’s following your train of thought, AI also records the position of each token to preserve word order.
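If you’re curious what “how a token relates to all the others” can look like in practice, here is a rough sketch of the scoring idea behind transformer attention. Everything in it is an assumption for illustration: the two-number vectors are made up, `attention_weights` is a hypothetical helper, and real models use far larger learned vectors and also mix in position information, which this sketch leaves out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Multiply two vectors element by element and add up the results."""
    return sum(x * y for x, y in zip(a, b))


def attention_weights(query, keys):
    """Score one token's vector against every token's vector, then normalize."""
    scale = math.sqrt(len(query))
    scores = [dot(query, key) / scale for key in keys]
    return softmax(scores)


# Made-up 2-number vectors; real models learn vectors with many more numbers.
token_vectors = {
    "Hello": [1.0, 0.0],
    "how": [0.2, 0.9],
    "are": [0.1, 0.8],
    "you": [0.3, 1.0],
}

tokens = list(token_vectors)
weights = attention_weights(
    token_vectors["you"], [token_vectors[t] for t in tokens]
)

for tok, weight in zip(tokens, weights):
    print(f"{tok}: {weight:.2f}")
```

With these made-up numbers, “you” ends up weighting the question words more heavily than “Hello”, which is the sort of relationship the model relies on when deciding what the message is really asking.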

For reference, when I asked Claude what a vector is in simplified terms as it relates to tokenization, I got this response: Think of words like points on a map of meaning. When you say "happy," "joyful," and "delighted," these words are like neighbors living very close to each other on this meaning map because they're similar. But "sad" would be far away from them, like on the opposite side of town. When you type a message to AI, it turns your words into these map coordinates (that's what we call vectors). So if you ask "How was your day?" the AI knows this question sits close to friendly, casual conversation on the meaning map. But if you ask "What's the square root of 144?" that question lives in a totally different neighborhood - over in the math district! It's like the AI has a giant map of all possible conversations, and your message is giving it directions to the right neighborhood where it should look for an appropriate response.
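The “map of meaning” idea can be tried out with a few lines of Python. This is only a sketch: the coordinates below are numbers I invented so that “happy” and “joyful” sit close together and “sad” sits far away, whereas a real model learns its vectors from huge amounts of text. Cosine similarity is one common way to measure how close two vectors point, where values near 1 mean “same direction” and negative values mean “opposite direction”.

```python
import math


def cosine_similarity(a, b):
    """Measure how closely two vectors point in the same direction (-1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented coordinates on a tiny two-dimensional "meaning map".
word_vectors = {
    "happy": [0.9, 0.8],
    "joyful": [0.85, 0.9],
    "sad": [-0.8, -0.7],
}

happy_vs_joyful = cosine_similarity(word_vectors["happy"], word_vectors["joyful"])
happy_vs_sad = cosine_similarity(word_vectors["happy"], word_vectors["sad"])

print(f"happy vs joyful: {happy_vs_joyful:.2f}")  # close neighbors
print(f"happy vs sad: {happy_vs_sad:.2f}")        # opposite side of town
```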

If it’s still not completely clicking, we’re going to break down our example just a bit further. Let’s take “Hello ChatGPT, how are you today?”. We inspect token1, “Hello”. We know that “Hello” is considered a greeting, and in most cases an appropriate response would be something similar in return, such as: hello, hey, what’s up, how’s it going, etc. In this case, AI is going to use a vector to align token1 to a “Greetings” category. That’s the kind of thing Claude was referring to when it described a question sitting “close to friendly, casual conversation on the meaning map”. Our second token, “ChatGPT”, tells the AI that we are referring to a noun, specifically the AI itself in this case, so the greeting is directed at the AI. Conversely, if ChatGPT were named Fred, and you said “Hello Fred”, it would interpret “Fred” as a reference to a specific person, or whoever you are directing the hello at.

Next, we arrive at token3, “how are”, which will be associated with the adverb category and signals that the user is seeking to further understand something. This means that at this point the AI can expect the tokens that follow to specify what the user is seeking to understand. Now, when we get to token4, “you”, we are specifying the noun and the target of our ask up to this point. The “you” vector will most likely point towards the response category of feelings. Note that since AI is a computer and artificial, it does not have feelings, unless you explicitly tell it to pretend that it does. In that case, it will make its best guess at the correct feelings to have using what we’ve talked about up to this point. Finally, we get to token5, “today?”. “Today” is most likely interpreted as looking to understand current events. AI will point it towards the current state category and do its best to assess the status of the now.

If you string all of this together, you get one of the most basic questions humans use to interact with one another all the time. When it is typed into an AI chat prompt, though, the AI will use the above strategy to take in your message and work out the most appropriate response to give you. As you can see, it is much more complicated for AI to produce a response to a basic greeting than it would be for you or me if asked the same question.

So, in summary, the intelligence component of AI is the engineering behind the scenes, which uses trained models to predict the most likely correct answer based on the input you provided. Then, since intelligence involves continuous learning, feedback matters too. When you tell the AI it is wrong or that it didn’t give the answer you’re looking for, it can try again within the conversation, and feedback like this can also be used in later training to slightly adjust the complex math so it better predicts the correct answer the next time (this adjustment relies on a technique called backpropagation, which is covered in the neural networks blog). The artificial piece of AI simply refers to the computer and machines part of this, as the intelligence is artificially created through complex math and computers.
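For a feel of what “slightly adjust the complex math” means, here is a tiny sketch of the nudge-and-repeat idea, using a model with a single adjustable number. To be clear about the assumptions: this is plain gradient descent on one weight, the `predict` and `update` helpers and the numbers are made up for illustration, and a real AI model adjusts billions of weights during training, with backpropagation being the method that works out each weight’s share of the error.

```python
def predict(weight, x):
    """A one-number 'model': the prediction is just weight times input."""
    return weight * x


def update(weight, x, target, learning_rate=0.1):
    """Nudge the weight in the direction that shrinks the squared error."""
    error = predict(weight, x) - target
    gradient = 2 * error * x  # slope of (weight * x - target) ** 2 with respect to weight
    return weight - learning_rate * gradient


weight = 0.0          # start with a bad guess
x, target = 1.0, 2.0  # for input 1.0, the "correct answer" is 2.0
errors = []

for step in range(20):
    errors.append(abs(predict(weight, x) - target))
    weight = update(weight, x, target)

print(f"first error: {errors[0]:.3f}")
print(f"last error: {errors[-1]:.3f}")
print(f"learned weight: {weight:.3f}")
```

Each pass makes the error a little smaller, which is the “learn from feedback” loop in miniature.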

Everything we’ve covered today makes up the very basics of how AI functions at an extremely simplified level. If you are curious about AI and want to learn more about the subject, I would highly recommend reading some of the published research papers from the companies I mentioned in the beginning. YouTube and other free courseware sites also offer basic intro courses to help you get familiar with key concepts. And finally, of course, you can reach out to me, as I will most likely post a few more times about AI, particularly on topics that interest me. Thanks again for reading, and hopefully you learned something new!